MOWA is a practical multiple-in-one image warping framework for computational photography that handles six distinct tasks. Unlike previous works tailored to specific tasks, our method solves various warping tasks arising from different camera models or manipulation spaces within a single framework. It also shows an ability to generalize to novel scenarios, as evidenced by both cross-domain and zero-shot evaluations.

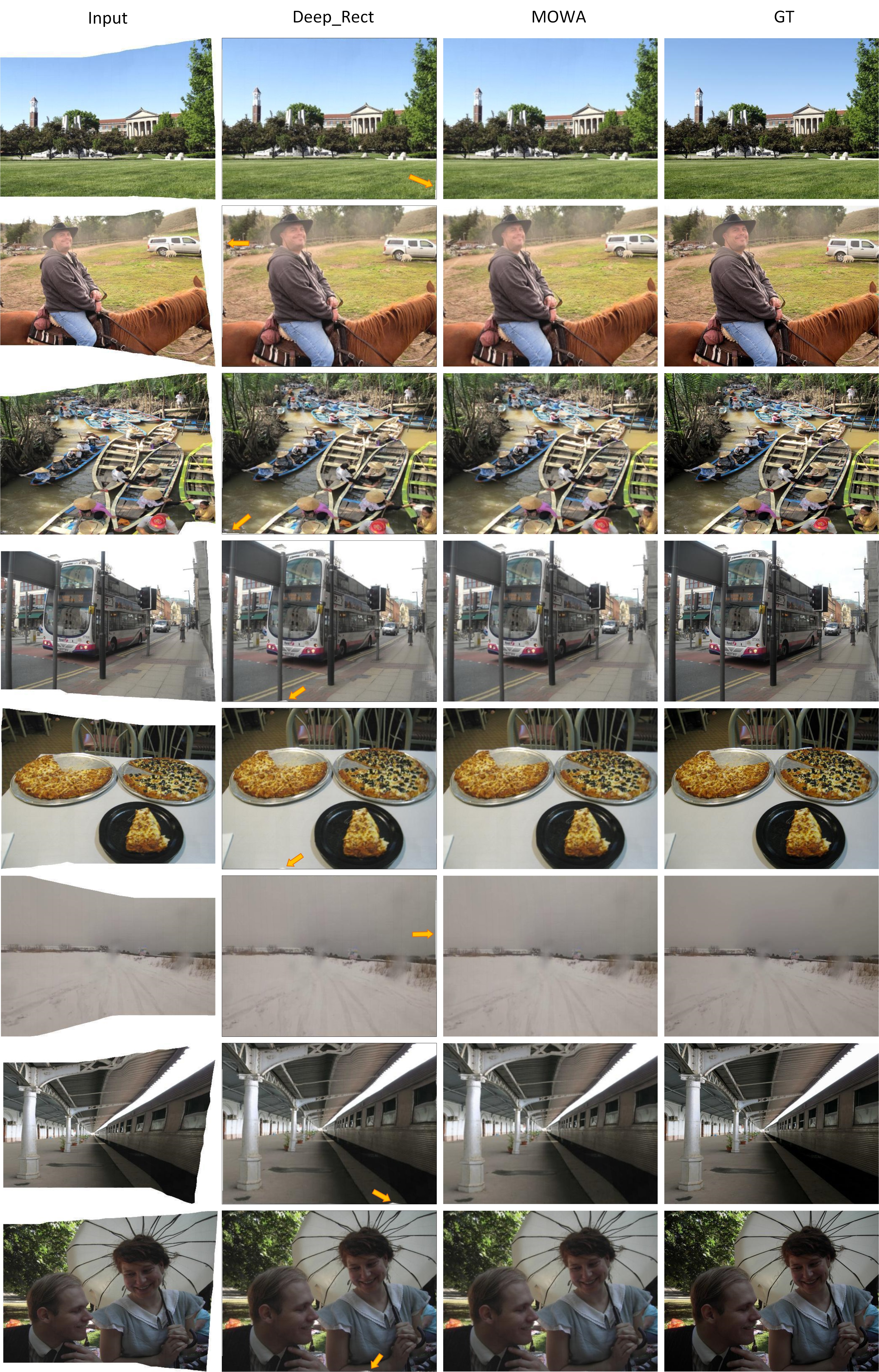

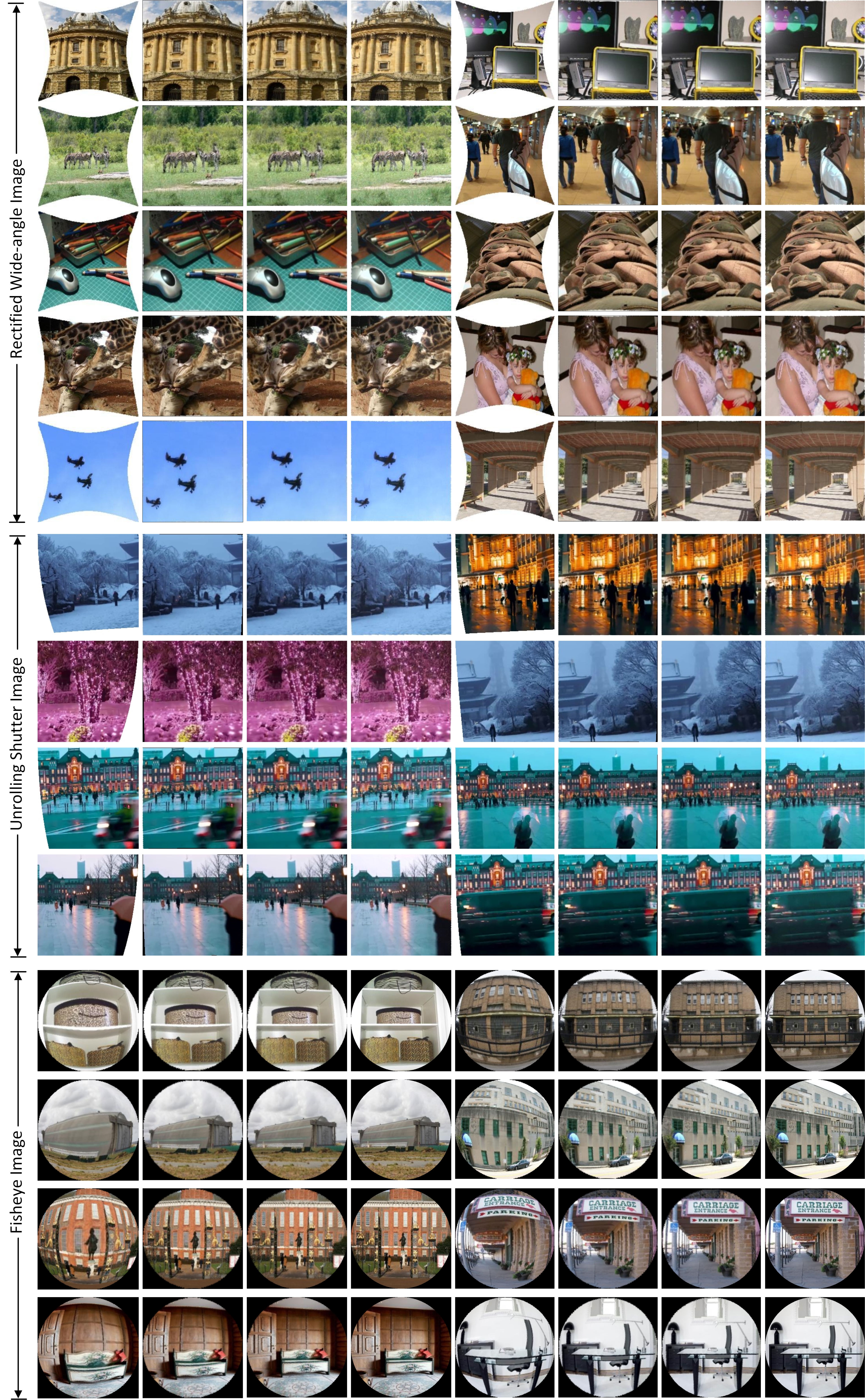

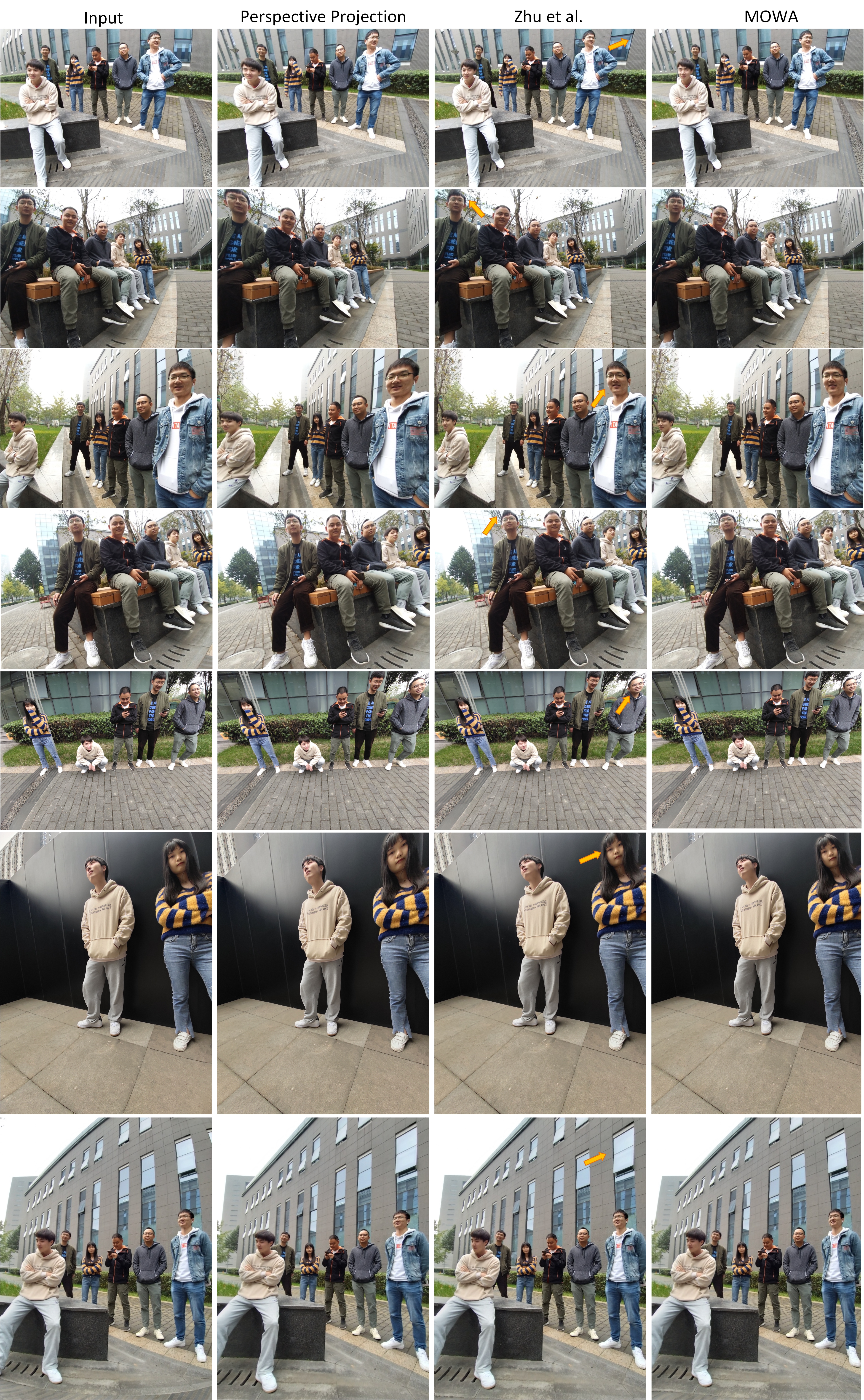

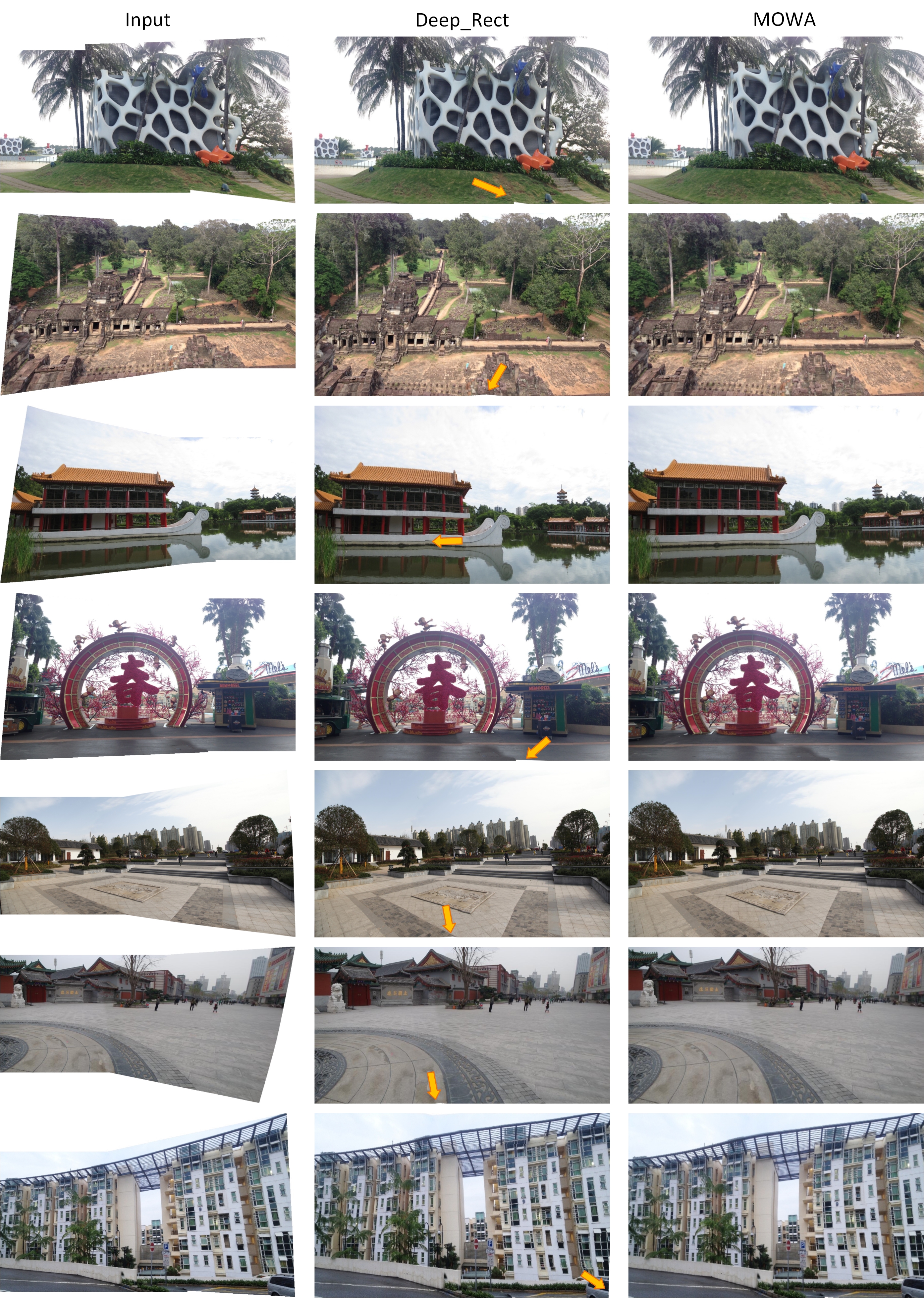

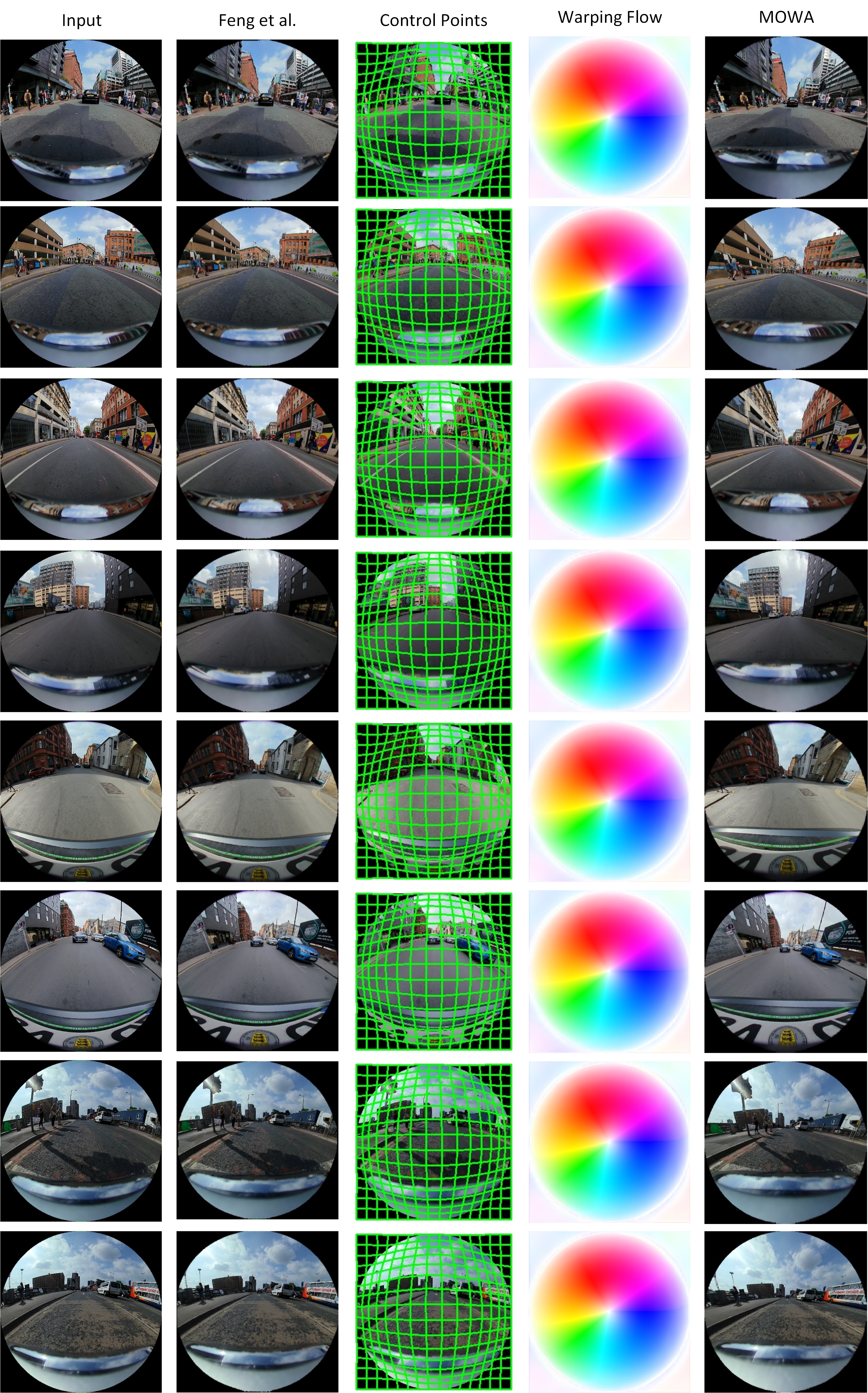

Results on Test Datasets. Qualitative comparison of our multiple-in-one framework MOWA with state-of-the-art (SOTA) image warping models. The red dotted lines mark the horizon, and the arrows highlight poorly warped regions such as irregular boundaries and distorted semantics.

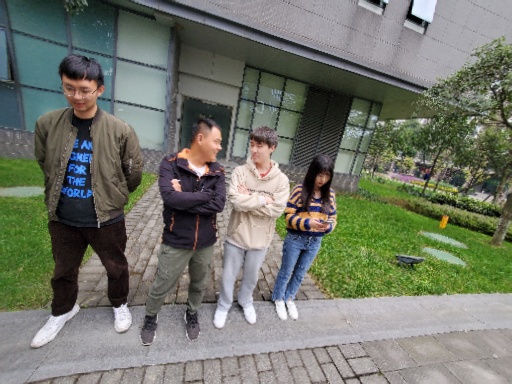

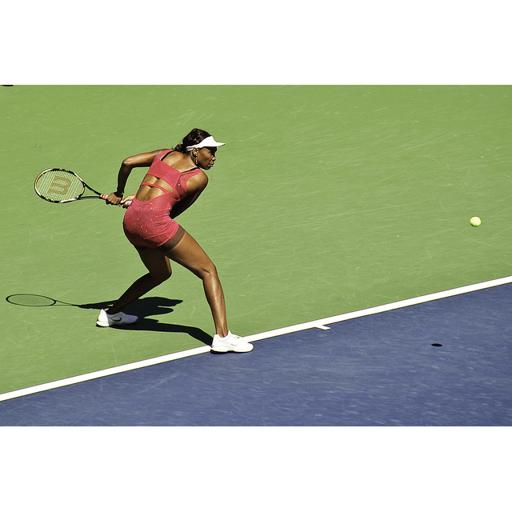

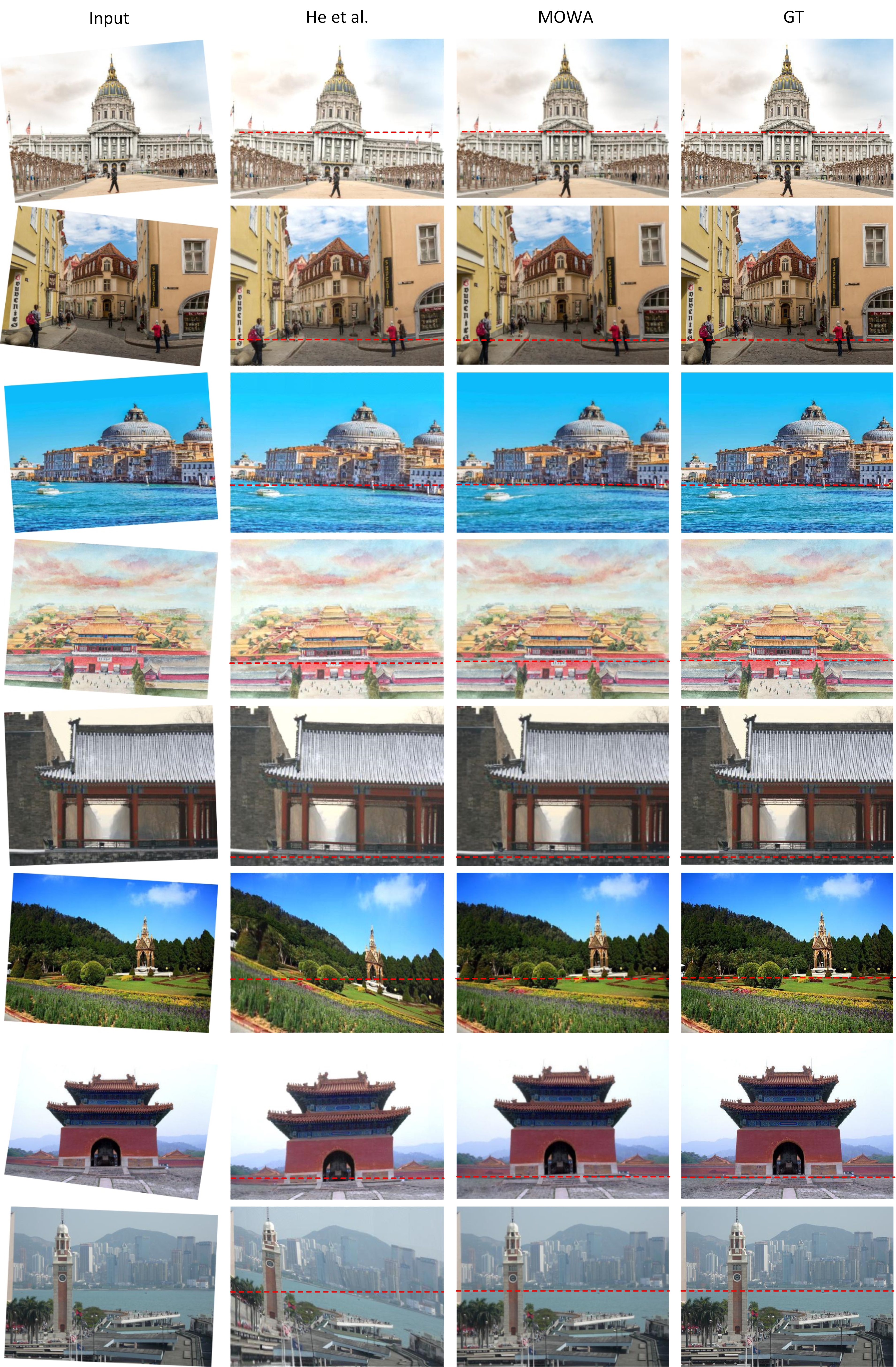

Cross-Domain and Zero-Shot Evaluations. Generalization evaluation of the proposed MOWA: cross-domain evaluation (top) and zero-shot evaluation (bottom). In the image retargeting results, the red dotted lines indicate how much the warping stretches the face or body.

Warping flow bridges the geometric gap between the input and target images. It is typically visualized with a colormap in which hue encodes the flow direction and saturation encodes the flow magnitude. We provide an interactive visualization [3] of MOWA's estimated per-pixel displacement: hover over a warping flow or an input image to see how the arrow changes and where each point is warped (on mobile devices, tap and drag instead). All examples are resized to 512x512 for compact display.
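As a rough illustration of the colormap convention described above, the following NumPy sketch converts an (H, W, 2) flow field into an RGB image, with hue set by the flow angle and saturation by the normalized magnitude. Brightness is fixed, so zero flow appears white. The function name `flow_to_rgb`, the (dx, dy) channel order, and the choice of which angle maps to red are our own assumptions; the exact color wheel used for the figures on this page may differ.

```python
from typing import Optional

import numpy as np


def flow_to_rgb(flow: np.ndarray, max_mag: Optional[float] = None) -> np.ndarray:
    """Map an (H, W, 2) flow field with (dx, dy) channels to an (H, W, 3) uint8 RGB image.

    Hue encodes the flow direction and saturation encodes its magnitude;
    value (brightness) is fixed at 1, so zero flow renders as white.
    """
    dx, dy = flow[..., 0], flow[..., 1]
    mag = np.hypot(dx, dy)
    if max_mag is None:
        max_mag = float(mag.max())
    # Hue in [0, 1): angle of the displacement vector from atan2(dy, dx), wrapped to one turn.
    hue = (np.arctan2(dy, dx) / (2 * np.pi)) % 1.0
    # Saturation in [0, 1]: magnitude normalized by max_mag (all-zero flow gives zero saturation).
    sat = np.clip(mag / max_mag, 0.0, 1.0) if max_mag > 0 else np.zeros_like(mag)
    val = 1.0
    # Vectorized HSV -> RGB: channel n is V - V*S*max(0, min(k, 4 - k, 1)), with k = (n + 6H) mod 6.
    channels = []
    for n in (5, 3, 1):  # R, G, B
        k = (n + hue * 6.0) % 6.0
        channels.append(val - val * sat * np.clip(np.minimum(k, 4.0 - k), 0.0, 1.0))
    rgb = np.stack(channels, axis=-1)
    return np.round(rgb * 255).astype(np.uint8)
```

For example, a unit flow pointing along +x maps to pure red, the opposite direction maps to cyan, and a zero vector stays white.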

Interactive examples (panels: Warping Flow, Input Image, Warped Result): Stitched Images, Rectified Wide-Angle Images, Unrolling Shutter Images, Rotated Images, Fisheye Images, Portrait Photos, and Image Retargeting.

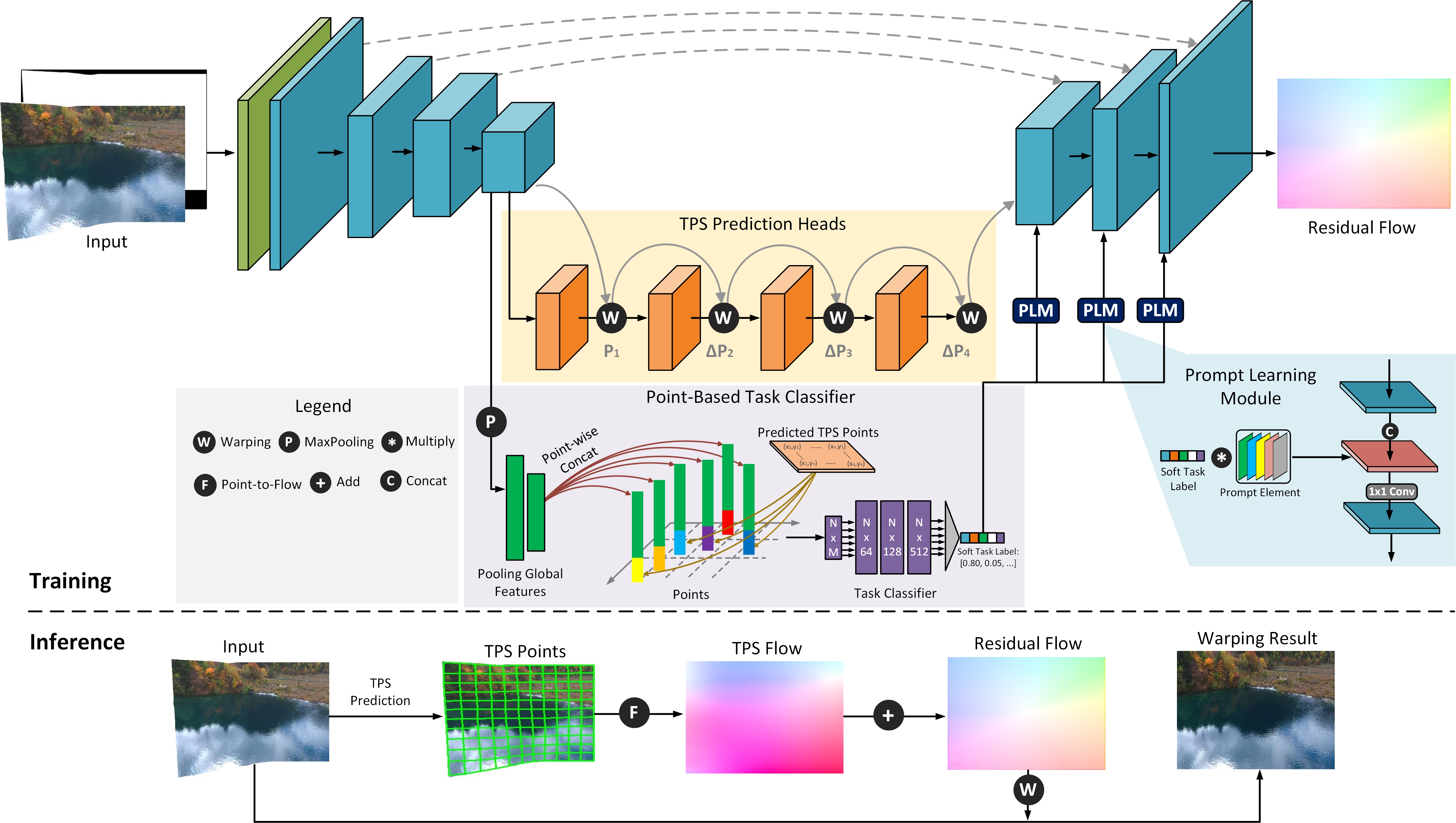

Framework. To mitigate the difficulty of multi-task learning, we decouple motion estimation at both the region level (TPS control points, progressively increased in number for refinement) and the pixel level (residual flow). Additionally, a prompt learning module, guided by a lightweight point-based classifier, is designed to facilitate task-aware image warping.
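To make the region-level/pixel-level decoupling concrete, here is a minimal NumPy sketch of how a coarse flow (for example, one induced by TPS control points) and a pixel-level residual flow could be combined and applied with bilinear backward warping. The helpers `backward_warp` and `compose_flows` are hypothetical, and we assume the residual refines the coarse-warped image, so the composed flow is r(p) + c(p + r(p)); MOWA's actual composition may differ.

```python
import numpy as np


def backward_warp(src: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Bilinearly sample src (H, W) or (H, W, C) at p + flow[p] for every pixel p.

    flow has shape (H, W, 2) with (dx, dy) channels; out-of-range samples are clamped to the border.
    """
    h, w = src.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0  # fractional offsets used as interpolation weights
    if src.ndim == 3:  # broadcast the weights over channels
        wx, wy = wx[..., None], wy[..., None]
    top = src[y0, x0] * (1 - wx) + src[y0, x1] * wx
    bottom = src[y1, x0] * (1 - wx) + src[y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def compose_flows(coarse: np.ndarray, residual: np.ndarray) -> np.ndarray:
    """Single flow equivalent to warping src by `coarse`, then warping that result by `residual`.

    out(p) = warped(p + r(p)) = src(p + r(p) + c(p + r(p))),
    so the total flow is r(p) plus c resampled at p + r(p).
    """
    return residual + backward_warp(coarse, residual)
```

Composing into one flow before sampling means the image is interpolated only once, instead of accumulating blur from two successive resamplings.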

TPS Control Points. The thin-plate spline (TPS) transformation [1] stands out for its flexibility in modeling complex motions: it warps an image based on two sets of region-level control points. We visualize the control points estimated at different levels and the corresponding progressive warping results as follows.

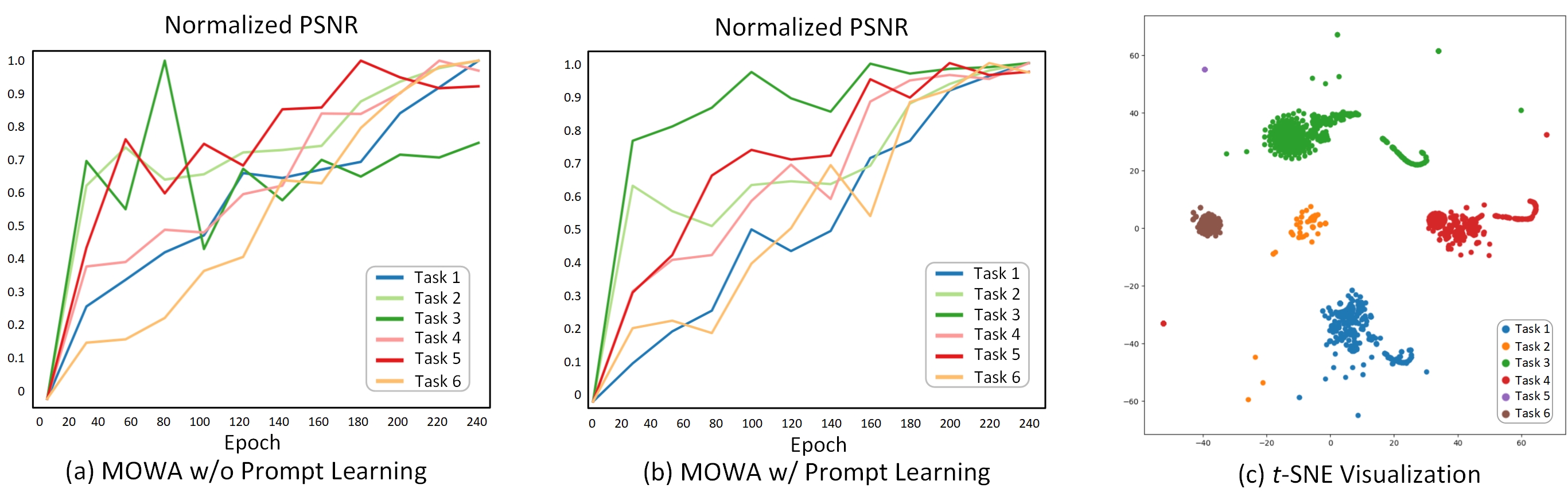
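The thin-plate spline itself can be fitted in closed form. The sketch below follows the classic formulation, with radial basis U(r) = r^2 log r^2 plus an affine term: it solves for a mapping from output-side to input-side control points and evaluates it on the pixel grid to obtain a dense backward flow. The function names are hypothetical, and the sketch handles a single, given set of control points; in MOWA the control points are predicted by the network at several levels, which is not modeled here.

```python
import numpy as np


def _tps_kernel(r2: np.ndarray) -> np.ndarray:
    # Radial basis U(r) = r^2 log r^2, written in terms of r2 = r^2, with U(0) = 0.
    return np.where(r2 > 0, r2 * np.log(np.where(r2 > 0, r2, 1.0)), 0.0)


def fit_tps(src: np.ndarray, dst: np.ndarray):
    """Fit a 2D TPS f with f(src[i]) = dst[i]; src and dst are (N, 2), not all collinear."""
    n = src.shape[0]
    d2 = np.sum((src[:, None, :] - src[None, :, :]) ** 2, axis=-1)
    P = np.hstack([np.ones((n, 1)), src])  # (N, 3) affine basis [1, x, y]
    L = np.zeros((n + 3, n + 3))
    L[:n, :n] = _tps_kernel(d2)
    L[:n, n:] = P
    L[n:, :n] = P.T
    rhs = np.zeros((n + 3, 2))
    rhs[:n] = dst
    params = np.linalg.solve(L, rhs)
    return params[:n], params[n:]  # radial weights (N, 2), affine coefficients (3, 2)


def apply_tps(src: np.ndarray, weights: np.ndarray, affine: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Evaluate the fitted TPS at (M, 2) points: a0 + x*ax + y*ay + sum_i w_i U(|p - src_i|)."""
    d2 = np.sum((pts[:, None, :] - src[None, :, :]) ** 2, axis=-1)
    return affine[0] + pts @ affine[1:] + _tps_kernel(d2) @ weights


def tps_flow(in_pts: np.ndarray, out_pts: np.ndarray, h: int, w: int) -> np.ndarray:
    """Dense (H, W, 2) backward flow: output pixel p samples the input at p + flow[p]."""
    weights, affine = fit_tps(out_pts, in_pts)  # map output-side points to input-side points
    ys, xs = np.mgrid[0:h, 0:w]
    grid = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(np.float64)
    mapped = apply_tps(out_pts, weights, affine, grid)
    return (mapped - grid).reshape(h, w, 2)
```

Because the affine part is solved jointly, a pure translation or other affine motion of the control points is reproduced exactly with zero radial weights; only non-affine displacements activate the radial terms.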

Prompt Learning. To dynamically enable task-aware image warping within a single model, MOWA includes a prompt learning module, which relieves the performance conflicts among different tasks. All tasks show a similar improvement trend as training progresses, and by the end of training the framework achieves its best warping performance on all tasks simultaneously. (Recap. Task 1: stitched images, Task 2: rectified wide-angle images, Task 3: unrolling shutter images, Task 4: rotated images, Task 5: fisheye images, Task 6: portrait photos.)
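The page does not specify how the point-based classifier guides the prompts, so the following is only a hypothetical sketch of one plausible design: a linear classifier maps flattened control-point coordinates to logits over the six tasks, the softmax probabilities blend a bank of per-task prompt vectors, and the resulting prompt is broadcast and concatenated to a feature map. All names, shapes, and the concatenation step are assumptions for illustration, not MOWA's implementation.

```python
import numpy as np

NUM_TASKS = 6  # the six warping tasks listed in the recap above


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def classify_points(points: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Hypothetical point-based classifier: (B, N, 2) control points -> (B, T) task logits."""
    return points.reshape(points.shape[0], -1) @ weight + bias


def task_prompt(logits: np.ndarray, prompt_bank: np.ndarray) -> np.ndarray:
    """Blend a (T, C_p) bank of (learnable) prompts by predicted task probabilities -> (B, C_p)."""
    return softmax(logits) @ prompt_bank


def inject_prompt(features: np.ndarray, prompt: np.ndarray) -> np.ndarray:
    """Tile a (B, C_p) prompt over (B, C, H, W) features and concatenate along channels."""
    b, _, h, w = features.shape
    tiled = np.broadcast_to(prompt[:, :, None, None], (b, prompt.shape[1], h, w))
    return np.concatenate([features, tiled], axis=1)


# Usage with random stand-ins for network outputs and learned parameters.
rng = np.random.default_rng(0)
points = rng.uniform(0.0, 1.0, size=(2, 16, 2))  # 2 images, 16 control points each
weight = rng.normal(size=(32, NUM_TASKS))
bias = np.zeros(NUM_TASKS)
prompt_bank = rng.normal(size=(NUM_TASKS, 8))
features = rng.normal(size=(2, 64, 32, 32))
out = inject_prompt(features, task_prompt(classify_points(points, weight, bias), prompt_bank))
# out.shape == (2, 72, 32, 32): 64 feature channels plus 8 prompt channels
```

Soft blending (rather than a hard argmax) keeps the prompt differentiable with respect to the classifier output, which is one reason such designs are common; whether MOWA blends or selects is not stated here.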

Additional comparisons: Stitched Images; Rotated Images; Rectified Wide-Angle, Unrolling Shutter, and Fisheye Images (for each comparison, we show the input image, the results of the comparison methods and MOWA, and the ground truth, from left to right); Portrait Photos. Additional generalization results: Stitched Images (Cross-Domain); Fisheye Images (Cross-Domain); Portrait Photos (Cross-Domain); Image Retargeting (Zero-Shot).

[1] Fred L. Bookstein, “Principal Warps: Thin-Plate Splines and the Decomposition of Deformations”, TPAMI 1989.

[2] Kaiming He, Huiwen Chang, Jian Sun, “Rectangling Panoramic Images via Warping”, SIGGRAPH 2013.

[3] Daniel Geng, “Flow Web Visualization”.

@article{liao2024mowa,
  author = {Liao, Kang and Yue, Zongsheng and Wu, Zhonghua and Loy, Chen Change},
  title = {MOWA: Multiple-in-One Image Warping Model},
  journal = {arXiv preprint arXiv:2404.10716},
  year = {2024},
}